Teddy Roland has joined the Digital Humanities at Berkeley staff as a consultant. He is a 20th-century Americanist, studying poetry and poetics. He holds a master’s degree from the University of Chicago, where he worked on circuits of capital in Modernism as they relate to the rise of free verse. He recently served as co-instructor for the Digital Humanities at Berkeley Summer Institute’s “Computational Text Analysis” class.

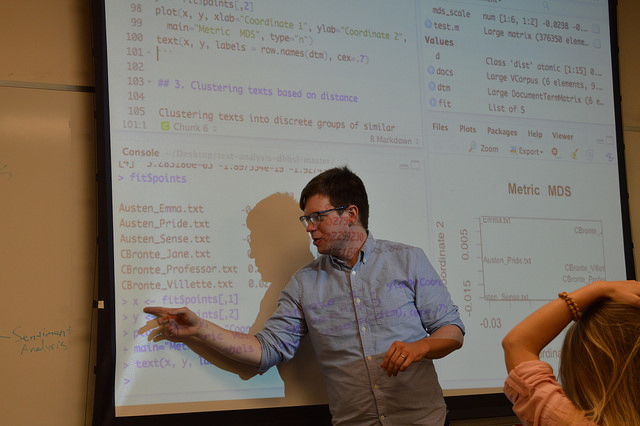

As a DH consultant, Teddy is working with the Literature and Digital Humanities Working Group (Lit+DH) to develop workshops on computational text analysis for literary studies. On September 29th, he will introduce working group members to the Natural Language Toolkit (NLTK), a popular Python library for text analysis. Over the course of the semester, he will take a project-oriented approach to introducing a few tools from the R programming language. He will also walk through some of the prerequisite steps for ingesting text into these tools.

For scholars looking to pick up these tools, Teddy recommends learning with a specific task in mind. Though he did take a class on network analysis, Teddy describes the summer he learned how to scrape the web with Python as the true catalyst for his interest in the digital humanities. After graduating from UChicago, Teddy began working as a research assistant for Professors Richard So and Hoyt Long, where he was tasked with compiling and formatting metadata for 1,000 American books published between 1880 and 1923. He quickly realized how long the process would take by hand (copying entries from WorldCat, pasting them into Excel, and formatting the metadata appropriately). Having no programming experience, Teddy convinced the professors that dedicating time to learning basic programming would speed up the process considerably. Focusing exclusively on learning Python through Codecademy’s free tutorials, Teddy was able to build a rudimentary scraper in two weeks. “The scripts I wrote are not elegant, especially when I look back at it now. But I came away from the experience feeling empowered,” Teddy shared. “I’ve read English papers from the 1930s and 40s where professors thank their small army of grad students who spent a year of their life counting syllables in Paradise Lost. Now, we can do a lot of that work with programming. I performed the task I was given in two weeks; it would have taken 6 months to do by hand.” Teddy then spent the summer performing more scraping tasks for the professors, reusing bits of code, refining his scripts, picking up Python packages, and learning how to troubleshoot.

Teddy pointed out that instructors and tutorial designers often underestimate the social aspects of learning how to use these tools, set up the environment necessary to run them, and move data back and forth between tools and formats. “Codecademy teaches you the language, but it doesn’t teach you how to program.” After learning the syntax, Teddy recalled, his next question was where to actually run the code on his computer. “Now I know: I had to download a Python interpreter and learn how to use the console. I didn’t know what versions of Python to use, I didn’t know why some packages were incompatible with Python 3. But I didn’t have that language at the time! I didn’t know what questions to ask; I didn’t even know what to look for on Google.” Teddy encourages scholars interested in the digital humanities to stay abreast of scholarly blogs and Twitter, where scholars test out new methods, experiment, and exchange critique.

Visit the Literature and DH Working Group’s website for more information about upcoming training. Interested in what else NLTK can do? Read our blog post on Kyle Booten, who teaches undergraduates to author electronic poetry in Python. See our resource guides for more information about computational text analysis.