Researchers and instructors at the Center for New Music and Audio Technology (CNMAT) are expanding their undergraduate course offerings in computer music, building upon decades of interdisciplinary research, performance, tool development, and teaching. DH Fellow Edmund Campion, Professor of Composition in the Music Department and Co-Director of CNMAT, and other instructors at CNMAT have designed a curriculum around computer music and digital sound. With the support of two course development grants from DH at Berkeley, the revised introductory course, “Music 158A: Sound and Music Computing,” and a new course, “Music 158B: Situated Instrument Design for Musical Expression,” will engage students in what it means to be musical in a digital context. Using CNMAT’s ODOT software library and Max/MSP, a visual programming language for media, students can quickly manipulate sound, gesture, and other forms of time-bound data to model and synthesize sound.

Over the years, Music 158 has drawn students from disciplines such as music, philosophy, computer science, cognitive science, and more. Course instructor Rama Gottfried, PhD student in Music and New Media, explained that the class helps students from these different disciplines arrive at a similar foundation for exploring computer music. “Computer science and engineering students receive more experience with humanistic analysis of music. They come to understand sound and musical thinking so they can apply their technical skills with more aesthetic knowledge and insight. Students from humanistic disciplines are much more attuned to analyzing what they’re doing and why they’re doing it. In this class they gain more experience with programming and other technical skills.”

In “Music 158B: Situated Instrument Design for Musical Expression,” students use these foundational skills to further explore the integration of technology and art making through instrument design. Students use technology to analyze, model, and synthesize sound. In lab, students use cameras, multi-touch tablets, accelerometers, microphones, and breath sensors as inputs for their instruments. Assignments pose musical challenges, such as creating a sense of counterpoint using a particular type of sensing. Through these hands-on experiments, students interrogate the expressive consequences of their decisions. “Each of these modes of interaction have different expressive characteristics,” Gottfried said. “Students make explicit decisions about which types of sensing and interaction to use. These decisions affect the experience of performing and the end sonic result.”

“Materiality is an effect of technology upon everything,” Gottfried shared. Digital music, mediated through headphones and speakers, must take the capabilities of those devices into account. “Digital music has its own parameters, and students don’t know the limits until they do some experimentation.”

Learn more about other digital humanities course offerings in Spring 2016.

Images:

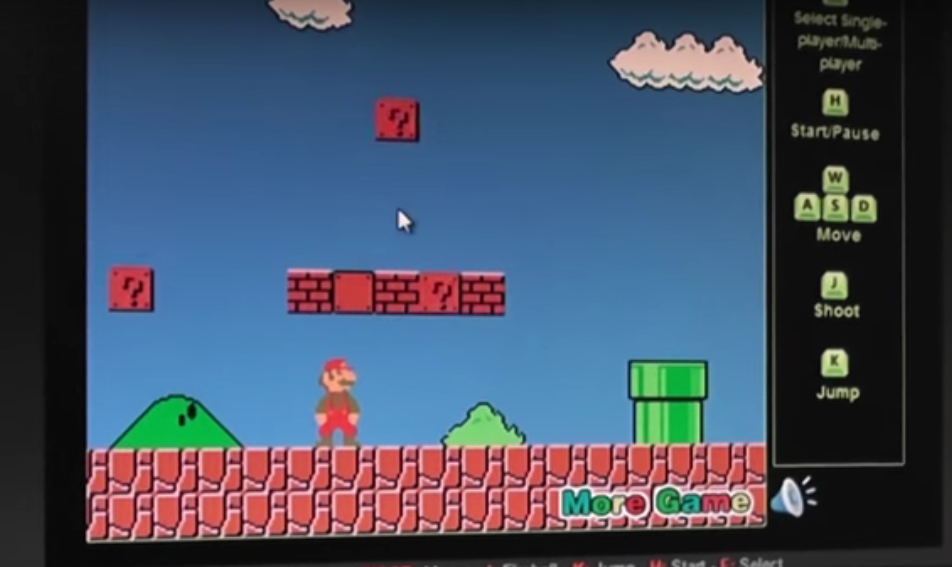

(1) Music 158B in session

(2) A screenshot from a 158A student project, where a video game character's movements are controlled by voice and changes in pitch. See the demo on YouTube.